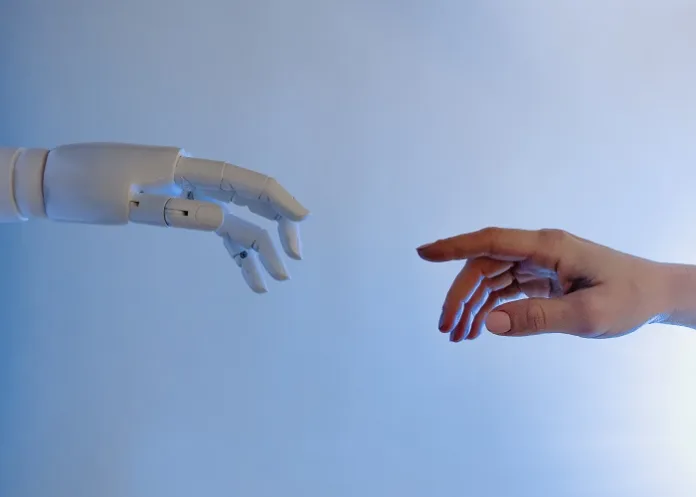

As people become increasingly familiar with artificial intelligence platforms like ChatGPT, it’s only a matter of time before patients turn to these tools for a diagnosis or a second opinion. But it’s hard to imagine how AI will ever replicate the gut feeling we hone by sitting at the bedside and placing our hands on thousands of patients.

Writing in STAT News, Dr Craig Spencer says:

Change is already on the horizon, with ChatGPT having passed the United States Medical Licensing Exams, the series of standardised tests required for medical licensure in the US. And recently the New England Journal of Medicine announced NEJM AI, a new journal devoted entirely to artificial intelligence in clinical practice.

These and many other developments have left many wondering (and sometimes worrying) what role AI will have in the future of healthcare.

It’s already predicting how long patients will stay in the hospital, denying insurance claims, and supporting pandemic preparedness efforts.

While there are areas within medicine ripe for the assistance of AI, any assertion that it will replace healthcare providers or make our roles less important is pure hyperbole.

Recently, I had an encounter that revealed one of the limitations of AI at the patient’s bedside, now and perhaps even in the future.

While working in the emergency room, I saw a woman with chest pain. She was relatively young, without any significant risk factors for heart disease. Her ECG was perfect. Her story and physical exam weren’t particularly concerning for an acute cardiac event, either. And the blood tests we sent – including one that detects damage to heart muscle from a heart attack – were all normal.

Based on nearly every algorithm and clinical decision rule that providers like me use to determine the next steps of care in cases like this, my patient was safe for discharge.

But something didn’t feel right. I’ve seen thousands of similar patients in my career. Sometimes subtle signs can suggest that not everything is OK, even when the clinical workup is reassuringly normal.

It’s hard to say exactly what tipped me off that day: the faint grimace on her face as I reassured her that everything looked fine, her husband saying “this just isn’t like her”, or something else entirely. But my gut instinct compelled me to do more instead of just discharging her.

When I repeated her blood tests and electrocardiogram a short while later, the results were unequivocal – she was having a heart attack. She was quickly whisked away for further care.

Most people are surprised to learn that treating patients in the emergency department involves a hefty dose of science mixed with a whole lot of art. We see some clear-cut cases, the type covered on board exams that virtually every provider would correctly diagnose and manage.

But in reality only a small percentage of patients have “classic” black-and-white presentations. The same pathology can present in completely different ways, depending on age, sex, or medical comorbidities.

We have algorithms to guide us, but we still need to select the right one and navigate the sequence correctly despite sometimes conflicting information. Even then, they aren’t flawless.

It’s these intricacies of our jobs that might cause many providers to cast a suspicious eye at the looming overlap of artificial intelligence and the practice of medicine.

We’re not the first cohort of clinicians to wonder what role AI should play in patient care. AI has been trying to transform healthcare for over half a century.

In 1966, an MIT professor created ELIZA, the first chatbot, which allowed users to simulate a conversation with a psychotherapist. A few years later, a Stanford psychiatrist created PARRY, a chatbot designed to mimic the thinking of a paranoid schizophrenic. In 1972, ELIZA and PARRY “met” in the first meeting between an AI doctor and patient. Their meandering conversation focused mostly on gambling at racetracks.

That same year work began on MYCIN, an artificial intelligence programme to help healthcare providers better diagnose and treat bacterial infections. Subsequent iterations and similar computer-assisted programmes – ONCOCIN, INTERNIST-I, Quick Medical Reference (QMR), CADUCEUS – never gained widespread use in the ensuing decades, but there’s evidence that’s finally changing.

Healthcare providers are now using artificial intelligence to tackle the Sisyphean burden of administrative paperwork, a major contributor to healthcare worker burnout. Others find it helps them communicate more empathetically with their patients. And new research shows that generative AI can help expand the list of possible diagnoses to consider in complex medical cases, something many of the students and residents I work with sheepishly admit to using occasionally.

They all admit AI isn’t perfect. But neither is the gut instinct of healthcare providers. Clinicians are human, after all. We harbour similar assumptions and stereotypes as the general public.

This is clearly evident in the racial biases in pain management and the disparities in maternal health outcomes. Further, the sentiment among many clinicians that “more is better” often leads to a cascade of over-testing, incidental findings and out-of-control costs.

So even if AI won’t replace us, there are ways it could make us better providers. The question is how best to combine AI and clinical acumen to improve patient care.

AI’s future in healthcare raises many important questions, too. For medical trainees who rely on it earlier in their training, what impact will it have on long-term decision-making and clinical skill development? How and when will providers be required to inform patients that AI was involved in their care? And, perhaps most pressing now, how do we ensure privacy as these tools become more embedded in patient care?

When I started medical school two decades ago, my training programme required the purchase of a PalmPilot, a personal handheld computer. We were told these devices were necessary to prepare us to enter medicine at a time of cutting-edge innovation.

After I struggled with software and glitches, mine stayed in my bag for years. The promise that this technology would make me a better provider never translated to patient care.

In a 1988 interview in Educational Researcher on the promise of AI in healthcare, Randolph Miller, a future president of the American College of Medical Informatics, predicted that artificial intelligence programmes for solving complex problems and aiding decision-making “probably will do wonders in the real worlds of medicine and teaching, but only as supplements to human expertise”.

Thirty-five years and several AI winters later, this remains true.

It seems all but certain that AI will play a substantial role in the future of healthcare, and it can help providers do our jobs better. It may even help us diagnose those heart attacks that even experienced clinicians can sometimes miss.

But until AI can develop a gut feeling, honed by working with thousands of patients, a few near-misses, and some humbling cases that stick with you, we’ll need the healing hands of real providers. And that might be forever.

Craig Spencer is a public-health professor and emergency-medicine physician at Brown University, USA.

STAT News article – AI can’t replicate this key part of practicing medicine (Open access)

See more from MedicalBrief archives:

ChatGPT tops doctors when it comes to bedside manner – US study

The risks of ChatGPT in healthcare

AI outperforms humans in creating cancer treatments — but doctors balk