A new artificial intelligence (AI) tool from the UK Institute of Cancer Research scans tumour cells to pick out women with especially aggressive ovarian cancer. Separately, in the US, the National Institutes of Health and Global Good have developed an algorithm to accurately identify precancerous changes in the cervix requiring medical attention.

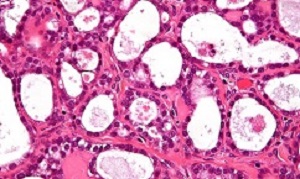

A team at the Institute of Cancer Research created an artificial intelligence (AI) tool that looks for clusters of cells within tumours with misshapen nuclei – the control centres within each cell. Women identified with these clusters of shape-shifting cells had extremely aggressive disease – with only 15% surviving for five years or more, compared with 53% for other patients with the disease.

The researchers found that having misshapen nuclei was an indication that the DNA of cancer cells had become unstable – and believe it could in future help doctors to select the best treatment for each patient.

Cancers with misshapen cell nuclei had hidden weaknesses in their ability to repair DNA, which could make them susceptible to drugs called PARP inhibitors or platinum chemotherapy. The researchers also found that immune cells were not able to move into the clusters of cells with misshapen nuclei, which suggests that cancers with these clusters are better at evading the immune system.

Understanding this immune escape mechanism could help develop new forms of immunotherapy to combat ovarian cancer.

Scientists at The Institute of Cancer Research (ICR) applied their powerful new computer tool to automatically analyse tissue samples from 514 women with ovarian cancer – together looking at nearly 150m cells. The study, funded by the ICR itself, used AI to look at the shape and spatial distribution of ovarian cancer cells and their surroundings.

The researchers found that tumours containing clusters of cells whose nuclei varied highly in shape had lower levels of activity of key DNA repair genes, including BRCA1. The test could be used to pick out tumours with lower levels of activity of DNA repair genes, even in cases where the genetic code of the BRCA genes remains intact. These hidden DNA repair defects would be overlooked when only testing for faults in DNA repair genes.

The presence of clusters was associated with an even worse prognosis than mutations in the BRCA genes. The team at the ICR – a research institute and a charity – also found that the clusters had higher levels of a protein called galectin-3, which is known to cause key immune cells to die.

The researchers believe that galectin-3 could represent a brand new escape route from the immune system in ovarian cancer and a potential target for new immunotherapies – although further research is needed.

Dr Yinyin Yuan, team leader in computational pathology at the ICR, said: “We have developed a simple new computer test that can identify women with very aggressive ovarian cancer so treatment can be tailored for their needs.

“Using this new test gives us a way of detecting tumours with hidden weaknesses in their ability to repair DNA that wouldn’t be identified through genetic testing. It could be used alongside gene testing to identify women who could benefit from alternative treatment options that target DNA repair defects, such as PARP inhibitors.

“Our test also revealed that ovarian tumours with these clusters of misshapen nuclei have evolved a new way of evading the immune system, and it might be possible to target this mechanism with new forms of immunotherapy.”

Professor Paul Workman, CEO of The Institute of Cancer Research, London, said: “This extremely clever new study has shown that by using AI to analyse routinely taken biopsy samples, it is possible to uncover visual clues that reveal how aggressive an ovarian tumour is.

“What makes this test even more exciting is its ability to pick out in a new and different way those women whose tumours have weaknesses in DNA repair – who might therefore respond to treatments that target these weaknesses.”

Abstract

How tumor microenvironmental forces shape plasticity of cancer cell morphology is poorly understood. Here, we conduct automated histology image and spatial statistical analyses in 514 high grade serous ovarian samples to define cancer morphological diversification within the spatial context of the microenvironment. Tumor spatial zones, where cancer cell nuclei diversify in shape, are mapped in each tumor. Integration of this spatially explicit analysis with omics and clinical data reveals a relationship between morphological diversification and the dysregulation of DNA repair, loss of nuclear integrity, and increased disease mortality. Within the Immunoreactive subtype, spatial analysis further reveals significantly lower lymphocytic infiltration within diversified zones compared with other tumor zones, suggesting that even immune-hot tumors contain cells capable of immune escape. Our findings support a model whereby a subpopulation of morphologically plastic cancer cells with dysregulated DNA repair promotes ovarian cancer progression through positive selection by immune evasion.

Authors

Andreas Heindl, Adnan Mujahid Khan, Daniel Nava Rodrigues, Katherine Eason, Anguraj Sadanandam, Cecilia Orbegoso, Marco Punta, Andrea Sottoriva, Stefano Lise, Susana Banerjee, Yinyin Yuan

A research team led by investigators from the National Institutes of Health and Global Good has developed a computer algorithm that can analyse digital images of a woman's cervix and accurately identify precancerous changes that require medical attention. This artificial intelligence (AI) approach, called automated visual evaluation, has the potential to revolutionise cervical cancer screening, particularly in low-resource settings.

To develop the method, researchers used comprehensive datasets to "train" a deep learning algorithm, a type of machine learning, to recognise patterns in complex visual inputs, such as medical images. The approach was created collaboratively by investigators at the National Cancer Institute (NCI) and Global Good, a project of Intellectual Ventures, and the findings were confirmed independently by experts at the National Library of Medicine (NLM).

"Our findings show that a deep learning algorithm can use images collected during routine cervical cancer screening to identify precancerous changes that, if left untreated, may develop into cancer," said Dr Mark Schiffman, of NCI's division of cancer epidemiology and genetics, and senior author of the study. "In fact, the computer analysis of the images was better at identifying precancer than a human expert reviewer of Pap tests under the microscope (cytology)."

The new method has the potential to be of particular value in low-resource settings. Health care workers in such settings currently use a screening method called visual inspection with acetic acid (VIA). In this approach, a health worker applies dilute acetic acid to the cervix and inspects the cervix with the naked eye, looking for "aceto whitening," which indicates possible disease. Because of its convenience and low cost, VIA is widely used where more advanced screening methods are not available. However, it is known to be inaccurate and needs improvement.

Automated visual evaluation is similarly easy to perform. Health workers can use a cell phone or similar camera device for cervical screening and treatment during a single visit. In addition, this approach can be performed with minimal training, making it ideal for countries with limited health care resources, where cervical cancer is a leading cause of illness and death among women.

To create the algorithm, the research team used more than 60,000 cervical images from an NCI archive of photos collected during a cervical cancer screening study that was carried out in Costa Rica in the 1990s. More than 9,400 women participated in that population study, with follow-up that lasted up to 18 years. Because of the prospective nature of the study, the researchers gained nearly complete information on which cervical changes became pre-cancers and which did not. The photos were digitised and then used to train a deep learning algorithm so that it could distinguish cervical conditions requiring treatment from those not requiring treatment.

Overall, the algorithm performed better than all standard screening tests at predicting all cases diagnosed during the Costa Rica study. Automated visual evaluation identified pre-cancer with greater accuracy (AUC=0.91) than a human expert review (AUC=0.69) or conventional cytology (AUC=0.71). An AUC of 0.5 indicates a test that is no better than chance, whereas an AUC of 1.0 represents a test with perfect accuracy in identifying disease.
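For readers unfamiliar with the AUC figures quoted above, the following is a minimal illustrative sketch, not the study's code. It uses the standard interpretation of AUC as the probability that a randomly chosen diseased case receives a higher score than a randomly chosen healthy case, with ties counted as half. The function name `auc_from_scores` and the toy scores are assumptions made up for illustration; none of the numbers come from the Costa Rica data.

```python
def auc_from_scores(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    scores: test outputs, higher meaning more suspicious.
    labels: 1 for disease (e.g. precancer), 0 for no disease.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # diseased case ranked above healthy case
            elif p == n:
                wins += 0.5   # a tie counts as a coin flip
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: three diseased cases followed by three healthy ones.
labels = [1, 1, 1, 0, 0, 0]
perfect_scores = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]   # every diseased case ranks higher
uninformative_scores = [0.5] * 6                  # the test cannot tell cases apart

print(auc_from_scores(perfect_scores, labels))
print(auc_from_scores(uninformative_scores, labels))
```

In this toy example the perfectly separating scores give an AUC of 1.0 and the uninformative scores give 0.5, matching the two reference points described above.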

"When this algorithm is combined with advances in HPV vaccination, emerging HPV detection technologies, and improvements in treatment, it is conceivable that cervical cancer could be brought under control, even in low-resource settings," said Maurizio Vecchione, executive vice president of Global Good.

The researchers plan to further train the algorithm on a sample of representative images of cervical precancers and normal cervical tissue from women in communities around the world, using a variety of cameras and other imaging options. This step is necessary because of subtle variations in the appearance of the cervix among women in different geographic regions. The ultimate goal of the project is to create the best possible algorithm for common, open use.

Abstract

Background: Human papillomavirus vaccination and cervical screening are lacking in most lower resource settings, where approximately 80% of more than 500 000 cancer cases occur annually. Visual inspection of the cervix following acetic acid application is practical but not reproducible or accurate. The objective of this study was to develop a “deep learning”-based visual evaluation algorithm that automatically recognizes cervical precancer/cancer.

Methods: A population-based longitudinal cohort of 9406 women ages 18–94 years in Guanacaste, Costa Rica was followed for 7 years (1993–2000), incorporating multiple cervical screening methods and histopathologic confirmation of precancers. Tumor registry linkage identified cancers up to 18 years. Archived, digitized cervical images from screening, taken with a fixed-focus camera (“cervicography”), were used for training/validation of the deep learning-based algorithm. The resultant image prediction score (0–1) could be categorized to balance sensitivity and specificity for detection of precancer/cancer. All statistical tests were two-sided.

Results: Automated visual evaluation of enrollment cervigrams identified cumulative precancer/cancer cases with greater accuracy (area under the curve [AUC] = 0.91, 95% confidence interval [CI] = 0.89 to 0.93) than original cervigram interpretation (AUC = 0.69, 95% CI = 0.63 to 0.74; P < .001) or conventional cytology (AUC = 0.71, 95% CI = 0.65 to 0.77; P < .001). A single visual screening round restricted to women at the prime screening ages of 25–49 years could identify 127 (55.7%) of 228 precancers (cervical intraepithelial neoplasia 2/cervical intraepithelial neoplasia 3/adenocarcinoma in situ [AIS]) diagnosed cumulatively in the entire adult population (ages 18–94 years) while referring 11.0% for management.

Conclusions: The results support consideration of automated visual evaluation of cervical images from contemporary digital cameras. If achieved, this might permit dissemination of effective point-of-care cervical screening.
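The Methods note that the image prediction score (0–1) can be categorised to balance sensitivity and specificity. The sketch below shows, under stated assumptions, how moving a cut-off on such a score trades one against the other. The function name `sensitivity_specificity`, the thresholds and the toy scores are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Flag every case whose score is at or above the threshold.

    Returns (sensitivity, specificity):
      sensitivity = flagged diseased cases / all diseased cases
      specificity = unflagged healthy cases / all healthy cases
    """
    tp = fn = tn = fp = 0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if label == 1:
            if flagged:
                tp += 1
            else:
                fn += 1
        else:
            if flagged:
                fp += 1
            else:
                tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical toy data: prediction scores in the range 0 to 1.
scores = [0.95, 0.80, 0.40, 0.60, 0.30, 0.10, 0.05, 0.20]
labels = [1, 1, 1, 0, 0, 0, 0, 0]

for threshold in (0.25, 0.50, 0.70):
    sens, spec = sensitivity_specificity(scores, labels, threshold)
    print(f"threshold={threshold:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
```

A lower cut-off refers more women for management and misses fewer cases; a higher cut-off refers fewer women but misses more. Where to set it is a programme decision rather than a property of the algorithm itself.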

Authors

Liming Hu, David Bell, Sameer Antani, Zhiyun Xue, Kai Yu, Matthew P Horning, Noni Gachuhi, Benjamin Wilson, Mayoore S Jaiswal, Brian Befano, L Rodney Long, Rolando Herrero, Mark H Einstein, Robert D Burk, Maria Demarco, Julia C Gage, Ana Cecilia Rodriguez, Nicolas Wentzensen, Mark Schiffman

[link url="https://www.icr.ac.uk/news-archive/new-ai-shapeshift-test-identifies-women-with-very-high-risk-ovarian-cancer"]The Institute of Cancer Research material[/link]

[link url="https://www.nature.com/articles/s41467-018-06130-3"]Nature Communications abstract[/link]

[link url="https://www.cancer.gov/news-events/press-releases/2019/deep-learning-cervical-cancer-screening"]NIH material[/link]

[link url="https://academic.oup.com/jnci/advance-article/doi/10.1093/jnci/djy225/5272614"]Journal of the National Cancer Institute abstract[/link]