An ultra-compact camera the size of a coarse grain of salt, designed for use inside the human body, has been developed by researchers at Princeton University and the University of Washington. It overcomes the limitations of previous approaches, which captured fuzzy, distorted images with limited fields of view.

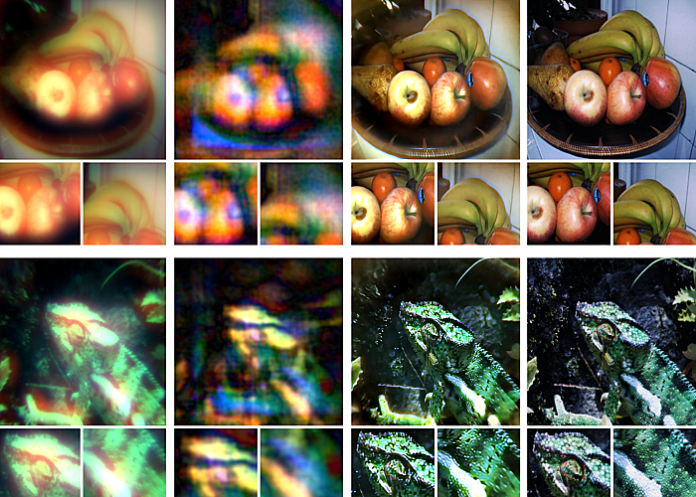

The new system can produce crisp, full-colour images on par with those of a conventional compound camera lens 500,000 times larger in volume, the researchers reported in a paper published in Nature Communications.

Made possible by the joint design of the camera’s hardware and computational processing, the system could enable minimally invasive endoscopy with medical robots to diagnose and treat diseases, and improve imaging for other robots with size and weight constraints. Arrays of thousands of such cameras could be used for full-scene sensing, turning surfaces into cameras.

While a traditional camera uses a series of curved glass or plastic lenses to bend light rays into focus, the new optical system relies on a technology called a metasurface, which can be produced much like a computer chip. Just half a millimetre wide, the metasurface is studded with 1.6 million cylindrical posts, each roughly the size of the human immunodeficiency virus (HIV).
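To make the contrast with curved lenses concrete: a flat lens focuses light by imposing a position-dependent phase delay on the incoming wavefront, and in the simplest case the posts must collectively reproduce that delay. The sketch computes the textbook phase profile of an ideal flat focusing lens, wrapped to one wave (2π). It is only an illustration under stated assumptions: the study's learned metasurface deliberately departs from this profile, and the 1 mm focal length (implied by the 0.5 mm aperture and f-number of 2 quoted in the paper's abstract) and 550 nm design wavelength are assumed values.

```python
import math

# Assumed values for illustration only (not the study's learned design):
# 0.5 mm aperture -> 0.25 mm radius; 1 mm focal length; 550 nm green light.
APERTURE_RADIUS = 0.25e-3  # metres
FOCAL_LENGTH = 1.0e-3      # metres
WAVELENGTH = 550e-9        # metres


def hyperbolic_phase(r_m, focal_m, wavelength_m):
    """Phase delay (radians, wrapped to [0, 2*pi)) of an ideal flat lens at radius r.

    Uses phi(r) = (2*pi/lambda) * (f - sqrt(r^2 + f^2)), rewritten as
    -(2*pi/lambda) * r^2 / (sqrt(r^2 + f^2) + f) to avoid cancellation near r = 0.
    """
    path_difference = r_m**2 / (math.sqrt(r_m**2 + focal_m**2) + focal_m)
    phi = -(2 * math.pi / wavelength_m) * path_difference
    # Wrap: each post only needs to supply the phase modulo one wave.
    return phi % (2 * math.pi)


for r in (0.0, 0.05e-3, 0.1e-3, APERTURE_RADIUS):
    phase = hyperbolic_phase(r, FOCAL_LENGTH, WAVELENGTH)
    print(f"r = {r * 1e3:.2f} mm -> phase = {phase:.3f} rad")
```

Wrapping to a single wave is what makes a flat surface possible: instead of a thick curved profile, each post only has to delay light by between 0 and 2π.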

Each post has a unique geometry, and functions like an optical antenna. Varying the design of each post is necessary to correctly shape the entire optical wavefront. With the help of machine learning-based algorithms, the posts’ interactions with light combine to produce the highest-quality images and widest field of view for a full-colour metasurface camera developed to date.
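One common way to turn a target phase profile into physical posts is a lookup table: simulate a family of post geometries once, record the phase delay each imparts, then place at every site the post whose phase is closest to the target. This minimal sketch assumes posts differ only in diameter and uses an invented, hypothetical table (`POST_LIBRARY`); real tables come from electromagnetic simulation, and the study's own learned design pipeline is more involved and is not reproduced here.

```python
import math

# Hypothetical library: post diameter (nm) -> phase delay (radians) it imparts.
# Values are invented for illustration, not simulated silicon nitride data.
POST_LIBRARY = [
    (100, 0.0),
    (140, 0.8),
    (170, 1.6),
    (200, 2.4),
    (225, 3.2),
    (250, 4.0),
    (270, 4.8),
    (290, 5.6),
]


def circular_distance(a, b):
    """Distance between two phases measured around the 2*pi circle."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def pick_post(target_phase):
    """Return the diameter whose phase is closest to the target (mod 2*pi)."""
    target = target_phase % (2 * math.pi)
    diameter, _ = min(POST_LIBRARY, key=lambda entry: circular_distance(entry[1], target))
    return diameter


# Map a few desired phases at different sites on the surface to post diameters.
desired = [0.1, 1.5, 3.0, 6.2, -0.3]
print([pick_post(p) for p in desired])
```

Measuring distance around the circle matters: a target of 6.2 rad is closer to 0 rad (one full wave later) than to 5.6 rad, so it maps to the smallest post.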

A key innovation in the camera’s creation was the integrated design of the optical surface and the signal-processing algorithms that produce the image. This boosted the camera’s performance in natural light, in contrast to previous metasurface cameras, which required the pure laser light of a laboratory or other ideal conditions to produce high-quality images, said Felix Heide, the study’s senior author and an assistant professor of computer science at Princeton.

The researchers compared images produced with their system to the results of previous metasurface cameras, as well as to images captured by a conventional compound optic that uses a series of six refractive lenses. Apart from some blurring at the edges of the frame, the nano-optic camera’s images were comparable to those of the traditional lens setup, which is more than 500,000 times larger in volume.
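Comparisons like this are often quantified with the mean squared error (MSE) against a reference image, or the equivalent peak signal-to-noise ratio (PSNR) in decibels. The article does not say which metrics were used, so this is a generic sketch on flattened pixel lists with values assumed to lie in [0, 1].

```python
import math


def mse(reference, test):
    """Mean squared error between two equal-length lists of pixel values."""
    if len(reference) != len(test):
        raise ValueError("images must have the same number of pixels")
    return sum((x - y) ** 2 for x, y in zip(reference, test)) / len(reference)


def psnr(reference, test, peak=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    err = mse(reference, test)
    if err == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak**2 / err)


reference = [0.2, 0.5, 0.8, 0.4]
candidate = [0.3, 0.5, 0.7, 0.4]
print(f"MSE = {mse(reference, candidate):.4f}, PSNR = {psnr(reference, candidate):.2f} dB")
```

Because PSNR is logarithmic in MSE, a tenfold drop in error corresponds to a 10 dB gain, which is one way to read the "order of magnitude lower reconstruction error" claimed in the paper's abstract.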

Other ultracompact metasurface lenses have suffered from major image distortions, small fields of view and a limited ability to capture the full spectrum of visible light in colour, known as RGB imaging because it combines red, green and blue light to produce different hues.
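Part of the difficulty with full-colour imaging is that a metasurface lens, like other diffractive lenses, is strongly chromatic. Under the standard paraxial approximation, a lens designed to focus at f0 for wavelength λ0 focuses at f0·λ0/λ for another wavelength λ. This sketch evaluates that relation for representative blue, green and red wavelengths, assuming a 1 mm focal length designed for 550 nm light; it illustrates the problem, not the study's solution.

```python
def diffractive_focal_length(focal_design_m, design_wavelength_m, wavelength_m):
    """Paraxial focal length of a diffractive (metasurface) lens at another wavelength.

    Assumes the standard diffractive-lens relation f(lambda) = f0 * lambda0 / lambda.
    """
    return focal_design_m * design_wavelength_m / wavelength_m


F0 = 1.0e-3       # assumed design focal length: 1 mm
LAMBDA0 = 550e-9  # assumed design wavelength: 550 nm (green)

for name, wavelength in (("blue", 450e-9), ("green", 550e-9), ("red", 650e-9)):
    f = diffractive_focal_length(F0, LAMBDA0, wavelength)
    shift_um = (f - F0) * 1e6
    print(f"{name:5s} {wavelength * 1e9:.0f} nm -> focus at {f * 1e3:.3f} mm ({shift_um:+.0f} um)")
```

With these assumed numbers, blue and red come to focus hundreds of micrometres apart, so a single uncorrected metasurface cannot keep all three colour channels sharp on one sensor plane.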

“It’s been a challenge to design and configure these little microstructures to do what you want,” said Ethan Tseng, a computer science PhD student at Princeton who co-led the study. “For this specific task of capturing large field of view RGB images, it’s challenging because there are millions of these little microstructures, and it’s not clear how to design them in an optimal way.”

Co-lead author Shane Colburn tackled this challenge by creating a computational simulator to automate testing of different nano-antenna configurations. Because of the number of antennas and the complexity of their interactions with light, this type of simulation can use “massive amounts of memory and time”, said Colburn. He developed a model to efficiently approximate the metasurfaces’ image production capabilities with sufficient accuracy.
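A common way to keep such simulations tractable is to summarise the optic by its point spread function (PSF), the image it forms of a single point of light, and to model the sensor image as the scene convolved with that PSF. This minimal sketch shows only that final step for one colour channel, on a small image stored as nested lists, and assumes the PSF is the same everywhere in the field of view; the study's own simulator is more involved and is not reproduced here.

```python
def convolve2d_same(image, psf):
    """Blur a 2D image (list of rows) with a PSF; zero-padded, output same size as input.

    Computes out[y][x] = sum_{i,j} psf[i][j] * image[y + cy - i][x + cx - j],
    a true convolution with the PSF centred at (cy, cx).
    """
    h, w = len(image), len(image[0])
    kh, kw = len(psf), len(psf[0])
    cy, cx = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    sy, sx = y + cy - i, x + cx - j
                    if 0 <= sy < h and 0 <= sx < w:  # pixels outside the frame count as 0
                        acc += psf[i][j] * image[sy][sx]
            out[y][x] = acc
    return out


# A single bright point: the simulated sensor image reproduces the PSF around it.
scene = [[0.0] * 5 for _ in range(5)]
scene[2][2] = 1.0
psf = [[0.0, 0.1, 0.0],
       [0.1, 0.6, 0.1],
       [0.0, 0.1, 0.0]]  # hypothetical, energy-preserving (sums to 1) blur
for row in convolve2d_same(scene, psf):
    print(" ".join(f"{v:.1f}" for v in row))
```

For RGB imaging the same step would be repeated with a separate PSF per colour channel, since the optic blurs each wavelength differently.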

Colburn conducted the work as a PhD student at the University of Washington Department of Electrical & Computer Engineering (UW ECE), where he is now an affiliate assistant professor. He also directs system design at Tunoptix, a Seattle-based company that is commercialising metasurface imaging technologies. Tunoptix was co-founded by Colburn’s graduate adviser Arka Majumdar, an associate professor in the University of Washington’s ECE and physics departments and a co-author of the study.

Co-author James Whitehead, a PhD student at UW ECE, fabricated the metasurfaces from silicon nitride, a glass-like material compatible with the standard semiconductor manufacturing methods used for computer chips. This means a given metasurface design could be mass-produced easily and at lower cost than the lenses in conventional cameras.

“Although the approach to optical design is not new, this is the first system that uses a surface optical technology in the front end and neural-based processing in the back,” said Joseph Mait, a consultant at Mait-Optik and a former senior researcher and chief scientist at the US Army Research Laboratory.

“The significance of the published work is completing the Herculean task to jointly design the size, shape and location of the metasurface’s million features and the parameters of the post-detection processing to achieve the desired imaging performance,” added Mait, who was not involved in the study.

Heide and his colleagues are now working to add more computational abilities to the camera itself. Beyond optimising image quality, they would like to add capabilities for object detection and other sensing modalities relevant for medicine and robotics.

Heide also envisions using ultracompact imagers to create “surfaces as sensors”.

“We could turn individual surfaces into cameras with ultra-high resolution, so you wouldn’t need three cameras on the back of your phone anymore, but the whole back of your phone would become one giant camera. We can think of completely different ways to build devices in the future,” he said.

The work was supported in part by the National Science Foundation, the US Department of Defense, the UW Reality Lab, Facebook, Google, Futurewei Technologies, and Amazon.

Study details

Neural nano-optics for high-quality thin lens imaging

Ethan Tseng, Shane Colburn, James Whitehead, Luocheng Huang, Seung-Hwan Baek, Arka Majumdar, Felix Heide.

Published in Nature Communications on 29 November 2021.

Abstract

Nano-optic imagers that modulate light at sub-wavelength scales could enable new applications in diverse domains ranging from robotics to medicine. Although metasurface optics offer a path to such ultra-small imagers, existing methods have achieved image quality far worse than bulky refractive alternatives, fundamentally limited by aberrations at large apertures and low f-numbers. In this work, we close this performance gap by introducing a neural nano-optics imager. We devise a fully differentiable learning framework that learns a metasurface physical structure in conjunction with a neural feature-based image reconstruction algorithm. Experimentally validating the proposed method, we achieve an order of magnitude lower reconstruction error than existing approaches. As such, we present a high-quality, nano-optic imager that combines the widest field-of-view for full-colour metasurface operation while simultaneously achieving the largest demonstrated aperture of 0.5 mm at an f-number of 2.
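The f-number N ties focal length f to aperture diameter D, so the figures in the abstract imply a focal length of about 1 mm (a derived value; the article itself does not state it):

```latex
N = \frac{f}{D}
\quad\Longrightarrow\quad
f = N D = 2 \times 0.5\,\mathrm{mm} = 1\,\mathrm{mm}
```

A low f-number means a wide cone of light converging to a short focus, which is where the aberrations mentioned in the abstract are hardest to control.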

Discussion

In this work, we present an approach for achieving high-quality, full-color, wide FOV imaging using neural nano-optics. Specifically, the proposed learned imaging method allows for an order of magnitude lower reconstruction error on experimental data than existing works. The key enablers of this result are our differentiable meta-optical image formation model and novel deconvolution algorithm. Combined together as a differentiable end-to-end model, we jointly optimize the full computational imaging pipeline with the only target metric being the quality of the deconvolved RGB image—sharply deviating from existing methods that penalize focal spot size in isolation from the reconstruction method.
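Deconvolution undoes, as far as noise allows, the blur introduced by the optic's PSF. The study's reconstruction is a learned, neural feature-based method that is not reproduced here; as a stand-in, this sketch uses classical Wiener deconvolution on a 1D signal with a circular (wrap-around) blur. The constant `noise_to_signal` is an assumed regularisation term that keeps the division stable at frequencies the PSF suppresses, and a naive O(n²) DFT keeps the example dependency-free.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * m * k / n) for k in range(n)) for m in range(n)]


def idft(X):
    """Naive inverse discrete Fourier transform."""
    n = len(X)
    return [sum(X[m] * cmath.exp(2j * cmath.pi * m * k / n) for m in range(n)) / n for k in range(n)]


def circular_blur(signal, psf):
    """Circular convolution; psf[0] is the centre tap, psf[-1] the left neighbour."""
    n = len(signal)
    return [sum(psf[k] * signal[(i - k) % n] for k in range(n)) for i in range(n)]


def wiener_deconvolve(blurred, psf, noise_to_signal=1e-3):
    """Classical Wiener deconvolution: X_hat = conj(H) * Y / (|H|^2 + K)."""
    H = dft(psf)
    Y = dft(blurred)
    X_hat = [h.conjugate() * y / (abs(h) ** 2 + noise_to_signal) for h, y in zip(H, Y)]
    return [v.real for v in idft(X_hat)]


scene = [0.0, 0.0, 1.0, 0.0, 0.0, 0.5, 0.0, 0.0]
psf = [0.6, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2]  # hypothetical symmetric blur, sums to 1
blurred = circular_blur(scene, psf)
restored = wiener_deconvolve(blurred, psf)
print("blurred: ", [round(v, 3) for v in blurred])
print("restored:", [round(v, 3) for v in restored])
```

In an end-to-end design like the one described here, the error between the restored image and the scene, rather than the PSF's spot size, is the quantity fed back to adjust both the optic and the reconstruction.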

We have demonstrated the viability of meta-optics for high-quality imaging in full-color, over a wide FOV. No existing meta-optic demonstrated to date approaches a comparable combination of image quality, large aperture size, low f-number, wide fractional bandwidth, wide FOV, and polarization insensitivity (see Supplementary Notes 1 and 2), and the proposed method could scale to mass production. Furthermore, we demonstrate image quality on par with a bulky, six-element commercial compound lens even though our design volume is 550,000× lower and utilizes a single metasurface.

We have designed neural nano-optics for a dedicated, aberration-free imaging task, but we envision extending our work towards flexible imaging with reconfigurable nanophotonics for diverse tasks, ranging from an extended depth of field to classification or object detection tasks. We believe that the proposed method takes an essential step towards ultra-small cameras that may enable novel applications in endoscopy, brain imaging, or in a distributed fashion on object surfaces.

Nature article – Neural nano-optics for high-quality thin lens imaging (Open access)
